Gaussians, Multivariate Gaussians and Halluciantions from such distributions

The curves given above are known as Gaussians. This post aims to discuss what they are, what they mean and to gain intuitive intuitions into what they signify. We shall further learn to hallucinate sample points from such curves and look at what likelihood means. These concepts are fundamental to machine learning and statistics in general.

Networks such as Generative Adversarial Networks(GANs) depend on these fundamental concepts and one shouldn’t dive into such networks without first understanding these concepts and gain an insight into randomness and information.

Let’s first simply look at Gaussians as a curve in mathematics, and then we will try to come to the concept of it being a probability distribution and infer what that means. The equation goes as follows :

where \( \mu \)= mean of all values of x and \(\sigma \)= the standard variance of all values of x. We shall show these later

Let us have a look at this curve and draw inferences on its nature.

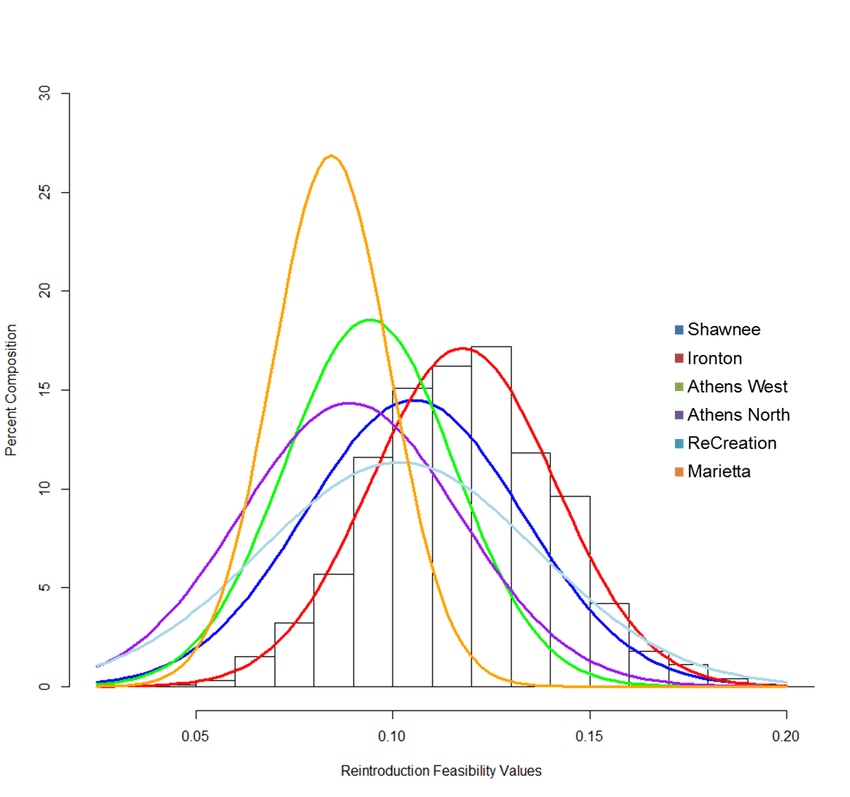

The highest point of this curve corresponds to \( x = \mu \) . We have taken a special case where \( \mu = 0 \) and \( \sigma = 1 \). In such a case, these are known as normalized Gaussians. Notice the cover figure of the post, the position of the different peaks are the means of those curves. \( \sigma \) tells us distribuited the data is away from the mean. So, the more spread out the curve, the higher is the value of \( \sigma \).

Note that . This means that the fucntion is a Probability Distribution Function. If we have a set of values of x that are Gaussian distributed, ie , then we mean to say that their occurence that a sample from x being some \( x_k\) follows the probability as per the curve depeinding on them mean of x \( \mu_x \) and the standard deviation \( \sigma_x \). \( \mu \) and \( \sigma \) are the only two parameters needed to get this distribution and hence they are together referred to as the sufficient statistics.

Looking at Machine learning from this point of view, we are all looking to develop models that find the “sufficient statistics” for realizing a function.

Simulation or Hallucination

From what has already been discussed, we should be able to infer the meaning of a distribution and its points. But, quite often in machine learning, we have to sample values from a given Gaussian distribution to get an array of certain dimensions. Eg: make an array of 100 numbers such that they are from the Gaussian given by mean=0 and standard deviation=0.2. This process of sampling is called Simulation or Hallucination.

It may be denoted as . To Understand this let us look as the Cumulative Distribution function which is as shown :

Graphically,

To sample from the Gaussian distribution, we will use the PDF, CDF and a random number generator(RNG). RNG functions are implemented in all popular programming languages. We will use a random number geneator $rng(x) \in [0,1]$. This follows a uniform distribution and all values are equiprobable.

Let us now have a look at the process.

For a single output of the RNG, say 0.65, we would project it on to the CDF and take the corresponding value of . Notice how a large portion of the rng numbers would project itself onto the linear section of the graph and thus be closer to the mean, as controlled by the variance. We are consistent with our PDF in this approach.

Expectation , Monte Carlo convergence and a reference to Information

The Expectation of a PDF refers to the most likely result of a single simulation of a PDF. For Gaussian distributions, this is easily visualizable from the graph that the mean is the expected value. if we encounter any random variable x, it is by the expectation that we define the mean.

The second equality to the summation os only valid for large values of in which case the sum converges to the integral for random variables. This is known as the Monte Carlo convergence.

Gaussians also have importance from the perspective of adding information to models. In the frequency domain, Gaussians are just a line parallel to the X-axis and are familiar to communication Engineers as AWGN. It does not discriminate but adds equally with all frequency components of a signal. Thus, in Generative models, the use of Gaussians further indicates the addition of the minimum possible bias to the model. This is further supported by the Central Limit Theorem according to which as the number of independent random variables added together tend to infinity, their distribution approaches a Gaussian.

Covariance and multivariate Gaussians

Covariance of two R.Vs X and Y tell us to what extent X and Y are Linearly related.

Note : and

The covariance matrix of a d-length vector where each feature is a random variable, on the other hand gives us an idea of how each feature is related with respect to the others. ie. for where each is a random variable, The covariance matrix is as shown,

It is denoted by . This matrix is symmetric in nature and knowing the upper triangular part or the lower triangular part would be sufficient to replicate the matrix entirely.

Let us define the multivariate Gaussian here and then go into a sanity or validity check. This is the gaussian function in higher dimensions (say d dimensions):

where each is a random variable and

To make sure that we have grasped the concept of sufficient statistics and a multivariate distribution, Let us take up where are two random variables with means but having the same variance.

, where and are samples from and .

We need the means and the covariance matrix as sufficient statistics.

- : 2 parameters

- .

Since this matrix is symmetric, we only need one of or : 3 parameters

So number of sufficient statistics is : 5.

Let us use this 2D distribution to have a look at the joint probability distribution. That is the probability of a sample of and a sample of occuring at the same time.

Let’s define the matrix notations. and are column vectors.

If and are independent their covariance is .

Let’s have a look at the determinant.

Now let’s explot the independence of and meaning

We see that \ref{final} is the same as the expression for multivariate Gaussians. This fundamental understanding is crucial to grasping the concepts of likelihood and Maximum Likelihood Estimation which shall be the next post in this Basics section.